VM internals

General

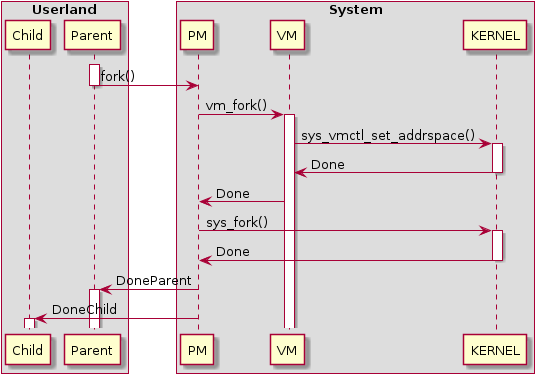

To encapsulate VM functionality, PM is split into process management and memory management. The memory management task is called VM; it implements the memory part of the fork, exec, exit, etc. calls, and PM calls it synchronously whenever those calls are made. This has made PM architecture-independent.

A typical interaction between userland, PM and VM looks like this, for the fork() call:

VM server

VM manages memory (keeping track of used and unused memory, assigning memory to processes, freeing it, etc.).

There is a clear split between architecture-dependent and architecture-independent code in VM. For i386 and ARM, there are Intel page tables that reside in VM's address space. VM maps these into its own address space by editing its own page table, so that it can edit them directly.

Data structures

The most important data structures in VM describe the memory a process is using in detail. Page tables are written purely from these data structures. They are owned and manipulated by functions in region.c. They are:

Regions

A region, described by a 'struct vir_region' or region_t, is a contiguous range of virtual address space that has a particular type and some parameters. Its type determines its properties and behaviour (see 'Memory types' later). It needn't have any real memory instantiated in it (yet). At any given time, a region has a fixed size in virtual address space, although some types can be resized on request (typically through the brk() call). Virtual regions have a starting address and a length, both page-aligned.

Virtual regions have a fixed-sized array of pointers to physical regions in them. Every entry represents a page-sized memory block. If non-NULL, that block is instantiated and points to a phys_region, describing the physical block of memory.

Physical regions

Physical regions, described by a 'struct phys_region', exist to reference physical blocks. A physical block describes a physical page of memory. The extra level of indirection is needed because the same page of physical memory may have to be referenced more than once, and a reference count must be kept to know (efficiently) whether a page is referenced zero times, once, or more than once. 'Blocks' here can be used interchangeably with pages.

Physical blocks

A physical block, described by a 'struct phys_block', describes a single page of physical memory. It holds the page's physical address and a reference count.
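The relationship between the three structures can be sketched in C as follows. This is a simplified illustration, not the actual definitions from VM's source: the field names (`vaddr`, `length`, `physblocks`, `ph`, `phys`, `refcount`), the helpers `region_new()` and `region_reference()`, and the 4096-byte page size are all assumptions made for the example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define SKETCH_PAGE_SIZE 4096u   /* assumed page size */

/* One page of physical memory, shared by reference count. */
struct phys_block {
    uint64_t phys;       /* physical address of the page */
    unsigned refcount;   /* number of references to this page */
};

/* One reference from a virtual region slot to a physical block. */
struct phys_region {
    struct phys_block *ph;
};

/* A contiguous, page-aligned range of virtual address space. */
struct vir_region {
    uintptr_t vaddr;                  /* starting address, page-aligned */
    size_t length;                    /* length in bytes, page-aligned */
    struct phys_region **physblocks;  /* one slot per page; NULL = not instantiated */
};

/* Create a region with no memory instantiated in it yet. */
static struct vir_region *region_new(uintptr_t vaddr, size_t length)
{
    assert(length > 0);
    assert(vaddr % SKETCH_PAGE_SIZE == 0 && length % SKETCH_PAGE_SIZE == 0);
    struct vir_region *vr = malloc(sizeof(*vr));
    if (vr == NULL)
        return NULL;
    vr->vaddr = vaddr;
    vr->length = length;
    vr->physblocks = calloc(length / SKETCH_PAGE_SIZE, sizeof(*vr->physblocks));
    if (vr->physblocks == NULL) {
        free(vr);
        return NULL;
    }
    return vr;
}

/* Point slot 'page' of 'vr' at 'pb', taking a reference on the block. */
static int region_reference(struct vir_region *vr, size_t page,
                            struct phys_block *pb)
{
    assert(page < vr->length / SKETCH_PAGE_SIZE);
    assert(vr->physblocks[page] == NULL);
    struct phys_region *pr = malloc(sizeof(*pr));
    if (pr == NULL)
        return -1;
    pr->ph = pb;
    pb->refcount++;
    vr->physblocks[page] = pr;
    return 0;
}
```

Two regions that reference the same `phys_block` each get their own `phys_region`, while the block's reference count records that the page is shared.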

Memory types

Each memory type is described by the data structure struct mem_type in memtype.h. The types are instantiated in the mem_*.c source files and declared in glo.h (mem_type_*). This neatly abstracts the different behaviour of different memory types when it comes to forking, pagefaulting, and so on, making the higher-level data structures and the code that manipulates them quite generic.
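The idea of a per-type behaviour table can be sketched as a struct of function pointers. The sketch below is only an illustration of the pattern: `struct mem_type_sketch`, its `on_pagefault` callback and the two example types are hypothetical names, not the actual fields of struct mem_type in memtype.h.

```c
#include <assert.h>
#include <stddef.h>

struct region_sketch;

/* Per-type behaviour table: generic code only calls through these pointers. */
struct mem_type_sketch {
    const char *name;
    int (*on_pagefault)(struct region_sketch *r, size_t offset, int write);
};

struct region_sketch {
    const struct mem_type_sketch *type;
    unsigned pages_instantiated;
};

/* Hypothetical type 1: memory that is instantiated on demand. */
static int anon_pagefault(struct region_sketch *r, size_t offset, int write)
{
    (void)offset;
    (void)write;
    r->pages_instantiated++;   /* a real handler would allocate a page here */
    return 0;
}

/* Hypothetical type 2: memory for which a pagefault is always an error. */
static int fixed_pagefault(struct region_sketch *r, size_t offset, int write)
{
    (void)r;
    (void)offset;
    (void)write;
    return -1;
}

static const struct mem_type_sketch type_anon  = { "anonymous", anon_pagefault };
static const struct mem_type_sketch type_fixed = { "fixed", fixed_pagefault };

/* Generic code: no knowledge of any particular memory type. */
static int region_pagefault(struct region_sketch *r, size_t offset, int write)
{
    assert(r != NULL && r->type != NULL && r->type->on_pagefault != NULL);
    return r->type->on_pagefault(r, offset, write);
}
```

The generic `region_pagefault()` stays the same when a new memory type is added; only a new table instance is needed.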

Cache

The in-VM disk block cache data structures and the code to manipulate them are contained in cache.c. Each cache block is page-sized and is uniquely identified by a (device, device offset) pair. It also carries (inode, inode offset) as extra information, but VM does not guarantee that this pair is unique, nor that it is present at all. The inode number might be VMC_NO_INODE, meaning that the disk block isn't part of inode data or that its inode number isn't known (e.g. because it ended up in the cache through a block device and not through a file).

The block contents are a 'Physical block' pointer, and being in the cache counts as a 'reference' in its refcount.

Blocks are indexed by two hash tables: one, the (device, device offset) pair, and two, the (inode, inode offset) pair. A block is only present in the 2nd hashtable if it is in an inode at all (inode != VMC_NO_INODE).

Furthermore cache blocks are on an LRU chain to be used for eviction in out-of-memory conditions.
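The double indexing plus LRU chain can be sketched as below. This is a minimal illustration under stated assumptions, not the code in cache.c: the structure layout, the function names, the hash function, the bucket count and `SKETCH_NO_INODE` (a stand-in for VMC_NO_INODE, whose real value is not assumed here) are all invented for the example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NBUCKETS 64u
#define SKETCH_NO_INODE 0u   /* stand-in for VMC_NO_INODE */

struct cache_block {
    uint32_t dev;                         /* unique key, part 1 */
    uint64_t dev_off;                     /* unique key, part 2 */
    uint64_t ino, ino_off;                /* optional extra key */
    struct cache_block *next_dev;         /* chain in the device hash table */
    struct cache_block *next_ino;         /* chain in the inode hash table */
    struct cache_block *older, *younger;  /* LRU chain */
};

static struct cache_block *by_dev[NBUCKETS], *by_ino[NBUCKETS];
static struct cache_block *lru_oldest, *lru_youngest;

static unsigned hash2(uint64_t a, uint64_t b)
{
    return (unsigned)((a * 31u + b / 4096u) % NBUCKETS);
}

static struct cache_block *cache_find_by_dev(uint32_t dev, uint64_t dev_off)
{
    struct cache_block *cb;
    for (cb = by_dev[hash2(dev, dev_off)]; cb != NULL; cb = cb->next_dev)
        if (cb->dev == dev && cb->dev_off == dev_off)
            return cb;
    return NULL;
}

/* Returns the first match; the inode pair is not guaranteed to be unique. */
static struct cache_block *cache_find_by_ino(uint64_t ino, uint64_t ino_off)
{
    struct cache_block *cb;
    if (ino == SKETCH_NO_INODE)
        return NULL;
    for (cb = by_ino[hash2(ino, ino_off)]; cb != NULL; cb = cb->next_ino)
        if (cb->ino == ino && cb->ino_off == ino_off)
            return cb;
    return NULL;
}

static void cache_add(struct cache_block *cb)
{
    unsigned h = hash2(cb->dev, cb->dev_off);

    /* The (device, device offset) pair must be unique. */
    assert(cache_find_by_dev(cb->dev, cb->dev_off) == NULL);
    cb->next_dev = by_dev[h];
    by_dev[h] = cb;

    /* Only blocks with a known inode enter the second table. */
    if (cb->ino != SKETCH_NO_INODE) {
        h = hash2(cb->ino, cb->ino_off);
        cb->next_ino = by_ino[h];
        by_ino[h] = cb;
    }

    /* Append as the youngest entry; eviction would start at lru_oldest. */
    cb->younger = NULL;
    cb->older = lru_youngest;
    if (lru_youngest != NULL)
        lru_youngest->younger = cb;
    else
        lru_oldest = cb;
    lru_youngest = cb;
}
```

A block added without an inode number is reachable through `cache_find_by_dev()` only, which matches the rule that the second hash table holds only blocks with a known inode.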

Typical call structure

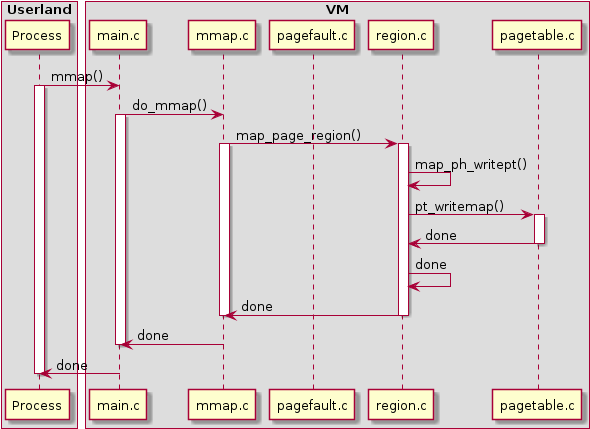

Calls into VM are received from 3 main sources: userland, PM and the kernel. In all cases, a typical flow of control is:

- Receive message in main.c

- Do call-specific work in call-specific file, e.g. mmap.c, cache.c

- This manipulates high-level data structures by invoking functions in region.c

- This updates the process pagetable by invoking functions in pagetable.c
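The first two steps of this flow amount to a dispatch from a received message to a call-specific handler. A minimal sketch of that pattern follows; the request numbers, the `struct request` layout and the handler names are hypothetical and do not correspond to the real VM call numbers or message format.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical request numbers; the real VM call numbers differ. */
enum { REQ_MMAP, REQ_MUNMAP, REQ_FORK, REQ_COUNT };

struct request {
    int type;     /* which call is being made */
    int source;   /* who sent it (userland, PM or the kernel) */
    size_t len;   /* call-specific argument */
};

typedef int (*handler_t)(const struct request *);

static int calls_seen[REQ_COUNT];

/* Call-specific work would live in call-specific files. */
static int do_mmap_sketch(const struct request *m)
{
    calls_seen[REQ_MMAP]++;
    return m->len > 0 ? 0 : -1;
}

static int do_munmap_sketch(const struct request *m)
{
    calls_seen[REQ_MUNMAP]++;
    return m->len > 0 ? 0 : -1;
}

static int do_fork_sketch(const struct request *m)
{
    (void)m;
    calls_seen[REQ_FORK]++;
    return 0;
}

static const handler_t handlers[REQ_COUNT] = {
    do_mmap_sketch, do_munmap_sketch, do_fork_sketch
};

/* The main loop would call this once per received message. */
static int dispatch(const struct request *m)
{
    if (m->type < 0 || m->type >= REQ_COUNT)
        return -1;   /* unknown call */
    assert(handlers[m->type] != NULL);
    return handlers[m->type](m);
}
```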

An example is mmap, when just used to allocate memory:

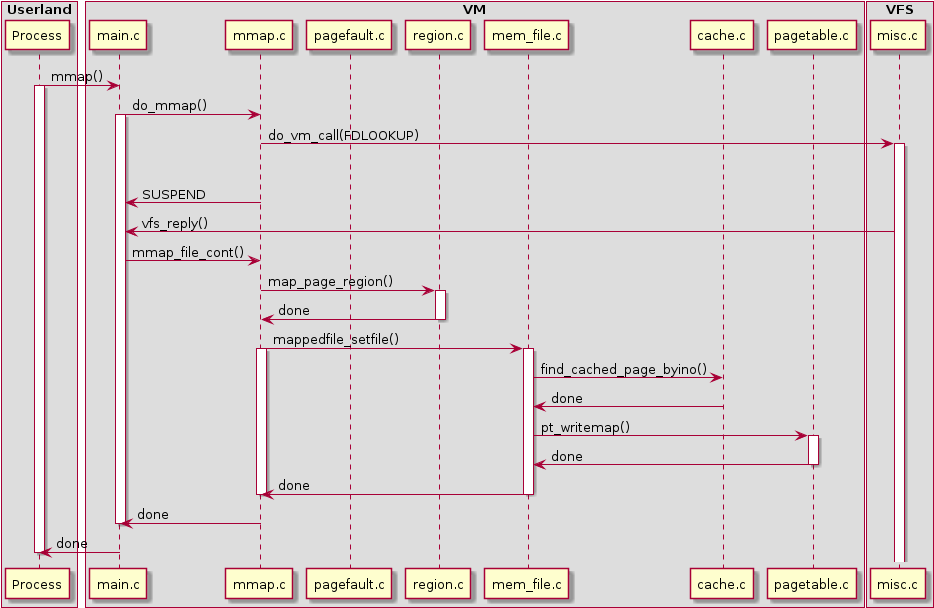

A more complicated example is where mmap is used to map in a file. VM must know the corresponding device and inode number, and does a lookup on the FD by calling VFS asynchronously to do so:

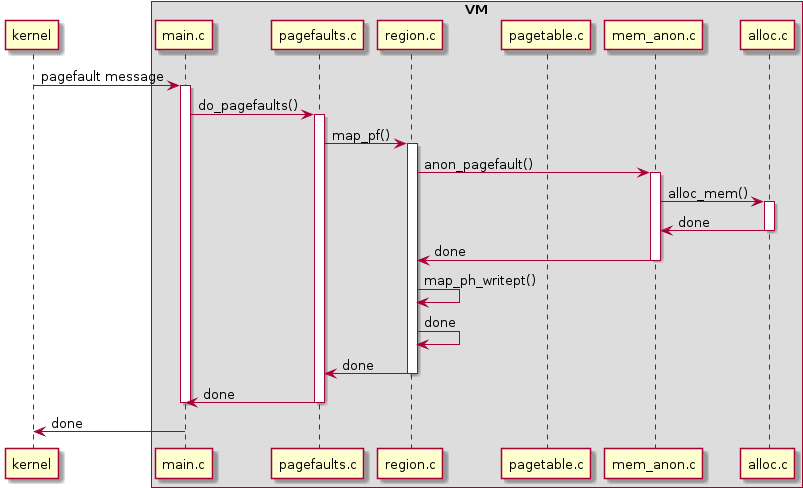

Handling absent memory: pagefaults, memory handling: calls from the kernel

There are two major cases in which memory is needed that can't be used directly:

- memory in a range that is mapped logically, but not physically (currently that is on-demand anonymous memory)

- memory that is mapped physically, but readonly as it's mapped in more than once (shared between processes that have forked), and so can't be written to directly.

VM makes sure the page is mapped readonly in the second case. There is no page table entry in the first case.

There are two major situations in which either of these cases can arise:

- a process uses the memory itself (page fault)

- the kernel wants to use that memory

In both cases the 'call' is generated by the kernel and arrives in VM through a 'kernel signal' in the form of a message.

The kernel must check for these cases whenever it wants to touch memory; e.g. in IPC but also in copying memory to/from processes in kernel context. If the kernel detects this, it stores this event, notifies VM, doesn't reply to the requester yet, and continues its event loop. VM then handles the situation (specifically, mapping in a copy of the page, or an entirely new page, as the case may be) and sends a message to the kernel.
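The two cases can be sketched as a single fault handler working on one page-sized slot. This is an illustration only: `struct page`, `struct slot`, `handle_fault()` and the result codes are hypothetical, zero-filling the new page is an assumption, and so is the shortcut of making a page writable in place once its reference count has dropped to one.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define SKETCH_PAGE_SIZE 4096u   /* assumed page size */

struct page {
    unsigned refcount;
    unsigned char data[SKETCH_PAGE_SIZE];
};

/* One page-sized slot of a region, as seen by one process. */
struct slot {
    struct page *pg;   /* NULL: mapped logically but not physically */
    int writable;      /* 0: mapped read-only in the page table */
};

enum fault_result {
    FAULT_NEW_PAGE, FAULT_COPIED, FAULT_MADE_WRITABLE,
    FAULT_NOTHING, FAULT_NOMEM
};

static enum fault_result handle_fault(struct slot *s, int write)
{
    if (s->pg == NULL) {
        /* Case 1: no physical page yet, so map in an entirely new one. */
        struct page *pg = calloc(1, sizeof(*pg));
        if (pg == NULL)
            return FAULT_NOMEM;
        pg->refcount = 1;
        s->pg = pg;
        s->writable = 1;
        return FAULT_NEW_PAGE;
    }
    if (!write || s->writable)
        return FAULT_NOTHING;   /* nothing to repair */

    assert(s->pg->refcount >= 1);
    if (s->pg->refcount == 1) {
        /* No longer shared: writing in place is safe (assumption). */
        s->writable = 1;
        return FAULT_MADE_WRITABLE;
    }

    /* Case 2: shared read-only page, so map in a private copy. */
    struct page *copy = malloc(sizeof(*copy));
    if (copy == NULL)
        return FAULT_NOMEM;
    memcpy(copy->data, s->pg->data, SKETCH_PAGE_SIZE);
    copy->refcount = 1;
    s->pg->refcount--;
    s->pg = copy;
    s->writable = 1;
    return FAULT_COPIED;
}
```

After a simulated fork, the first writer gets a copy and the remaining holder of the original page can simply be granted write access.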

Pagefaults are memory-type specific. How a pagefault in anonymous memory might look:

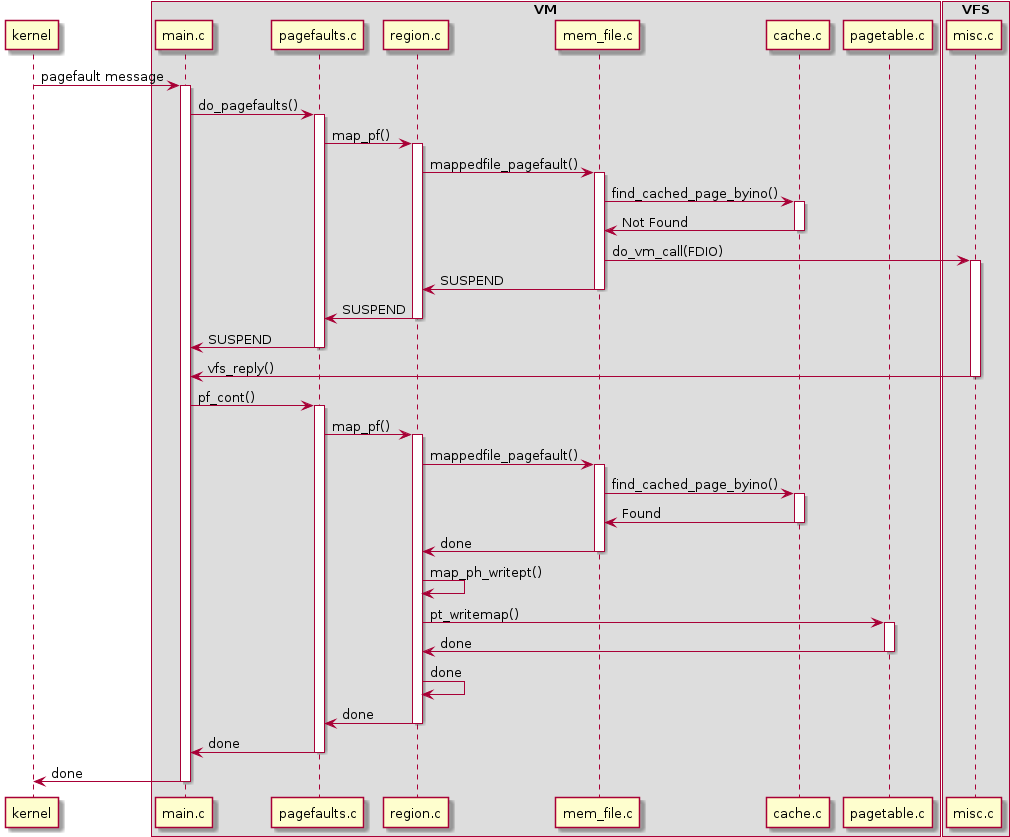

If a pagefault is in a file-mapped region, the cache is queried for the presence of the right block. If it isn't there, a request to VFS will have to happen asynchronously for the block to appear in the cache. Once VFS indicates the request is complete, the pagefault code is simply re-invoked the same way.

Physical / contiguous memory

Many areas in the system, inside and outside the kernel, assume that memory that is contiguous in the virtual address space is also contiguous in physical memory, but this assumption is no longer true. Therefore all instances of umap calls in the kernel had to be checked to see:

- whether an extra lookup had to be done to get the real physical address

- whether that code assumes that the memory is physically contiguous, or even that the memory is present at all

Processes that need physically contiguous memory specifically have to ask for it. A warning in the kernel is printed if an old umap function is called. A new umap segment (VM_D as opposed to D) was added that does a physically-contiguous check, but doesn't print a warning. The VM_D is meant to indicate that the caller is aware that memory isn't automatically contiguous physically, and that if it wants it to be, it has made arrangements for that itself, e.g. by using alloc_contig().
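What a physically-contiguous check has to establish can be sketched as follows. This is not the kernel's umap code: the function name, the array-of-physical-addresses input and the convention that 0 marks a page that is not present are assumptions made for the example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_PAGE_SIZE 4096u   /* assumed page size */

/*
 * phys[i] is the physical address backing virtual page i of a range;
 * 0 marks a page that is not present (a convention of this sketch).
 * Returns 1 only if every page is present and each page directly
 * follows the previous one in physical memory.
 */
static int range_is_phys_contiguous(const uint64_t *phys, size_t npages)
{
    size_t i;

    assert(phys != NULL);
    for (i = 0; i < npages; i++) {
        if (phys[i] == 0)
            return 0;   /* memory isn't even present */
        if (i > 0 && phys[i] != phys[i - 1] + SKETCH_PAGE_SIZE)
            return 0;   /* virtually adjacent, physically scattered */
    }
    return 1;
}
```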

Drivers

Drivers have been updated to:

- Request contiguous memory if necessary (DMA)

- Request it below 16MB physical memory (DMA; lance and floppy) or below 1MB physical memory (BIOS driver)
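The address limits amount to a simple range check on the physical buffer a driver receives. The helper below is a hypothetical sketch of that check, not a MINIX API; it could serve as a sanity check after a buffer has been allocated.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define LIMIT_16MB (16u * 1024u * 1024u)   /* DMA limit (lance, floppy) */
#define LIMIT_1MB  (1u * 1024u * 1024u)    /* BIOS driver limit */

/* Returns 1 if [phys, phys + len) lies entirely below 'limit'. */
static int buffer_below_limit(uint64_t phys, size_t len, uint64_t limit)
{
    assert(len > 0);
    /* Written this way to avoid overflow in phys + len. */
    return phys < limit && len <= limit - phys;
}
```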